Have you ever wondered how your brain is so good at learning new things? Think back to when you first tried riding a bike: you fell off, but with practice you got better and better. Or when you were learning to parallel park and it seemed impossible, but now it's a piece of cake. The secret lies in the billions of neurons in your brain and the connections between them. The human brain has around eighty-six billion neurons and many, many more interconnections. When you learn something new, a particular group of neurons gets activated together and new connections form between them. The more you practise, the stronger those connections become, and the easier it gets for your brain to do that thing. That's why they say, "practice makes perfect!"

Computers can learn in a similar way through a process called deep learning. Deep learning models are fed lots of data, and their outputs are compared with the expected outcomes so that they can refine their "thinking." This loosely mirrors the way your brain learns from experience through something called synaptic plasticity. So, just like our brains, these machines can get really good at what they do with practice!

The beginning of everything

In 1943, researchers Warren McCulloch and Walter Pitts published a paper titled "A Logical Calculus of the Ideas Immanent in Nervous Activity", which described a mathematical model of how neurons in the brain work together to process information. This paper laid the groundwork for artificial neural networks: computational models that mimic the behaviour of neurons in the brain. However, it wasn't until the 1980s that real progress was made, largely thanks to the popularisation of the backpropagation algorithm (explained a bit later), which made it possible to train neural networks with multiple layers.

Because deep learning is a subset of machine learning, the two can be hard to tell apart. One of the major differences is the amount of data and computing power each requires. Traditional machine learning can work with smaller datasets and less powerful computers, while deep learning needs larger datasets and more powerful hardware, such as graphics processing units (GPUs). This is why it wasn't until the late 2000s and early 2010s, with the rise of big data and improved computational power, that deep learning really started to flourish.

In recent years, deep learning has seen significant advancements, with architectures such as Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs) and Generative Adversarial Networks (GANs) becoming widely used.

The technical side

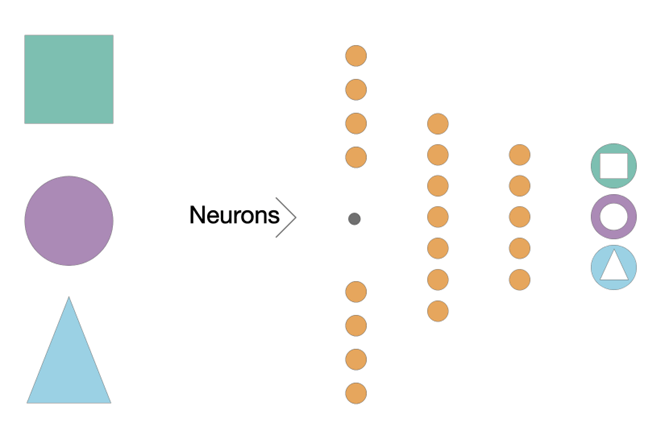

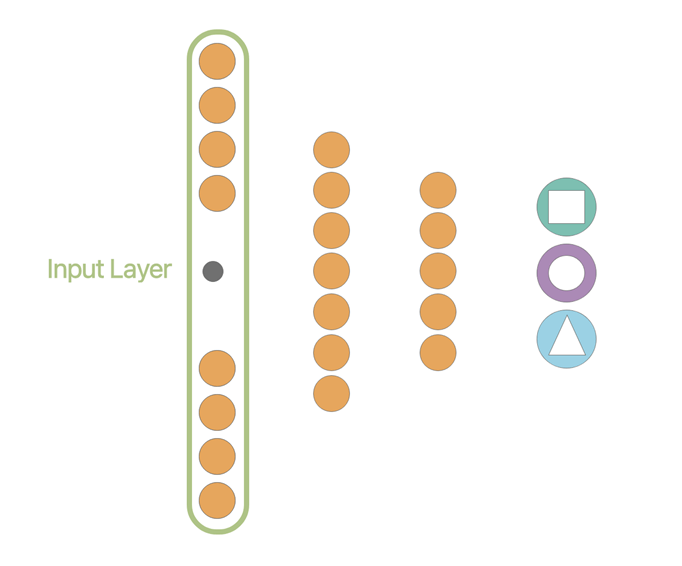

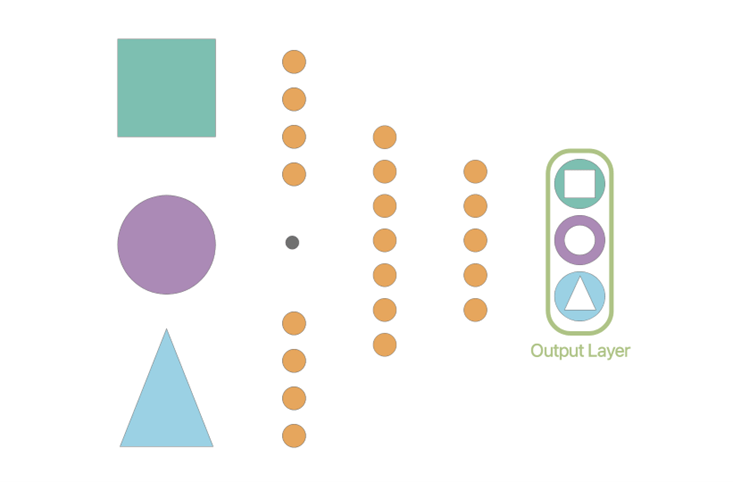

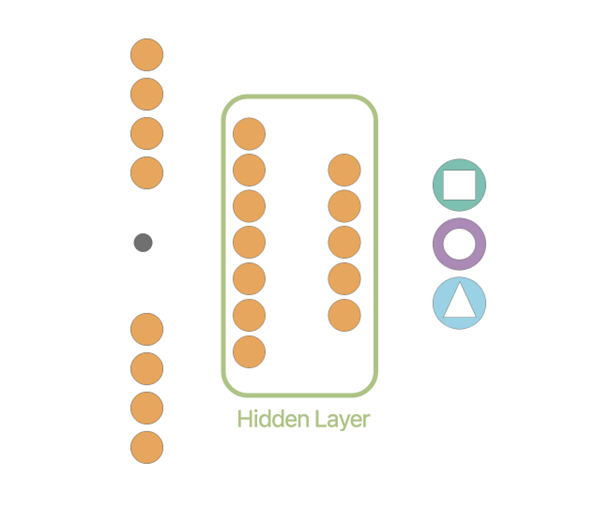

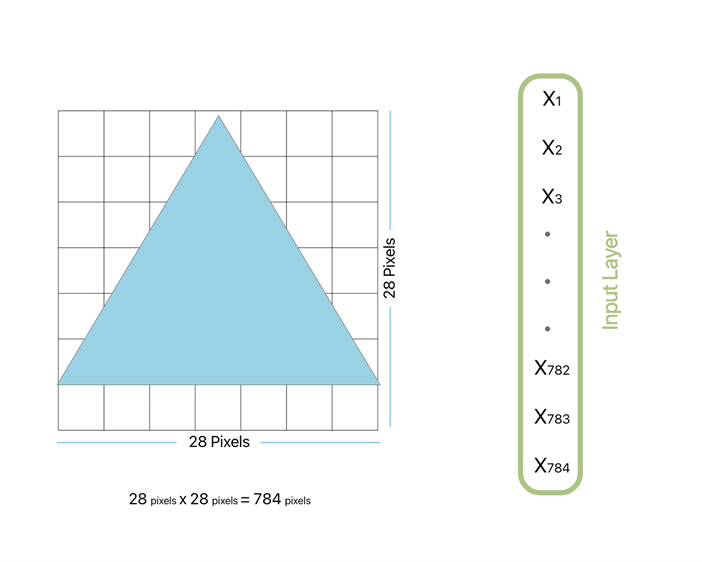

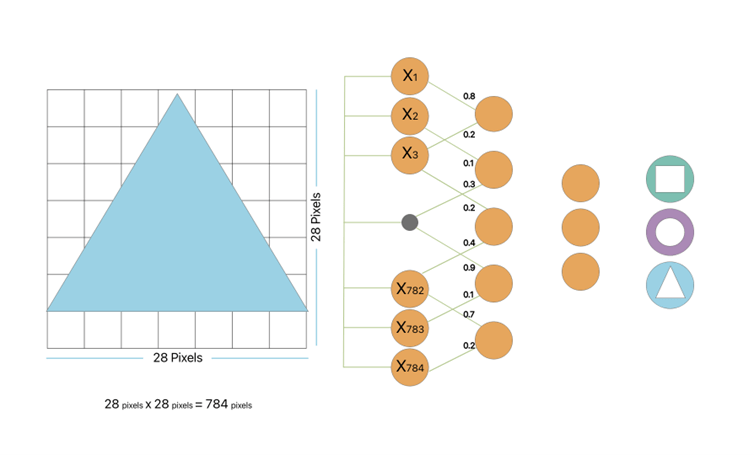

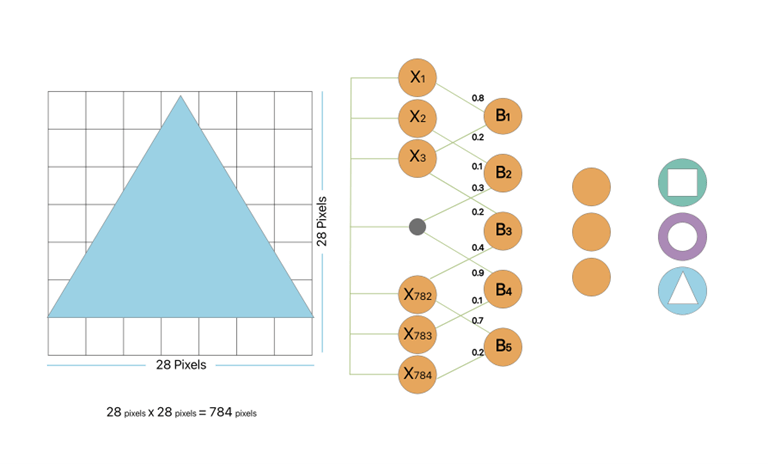

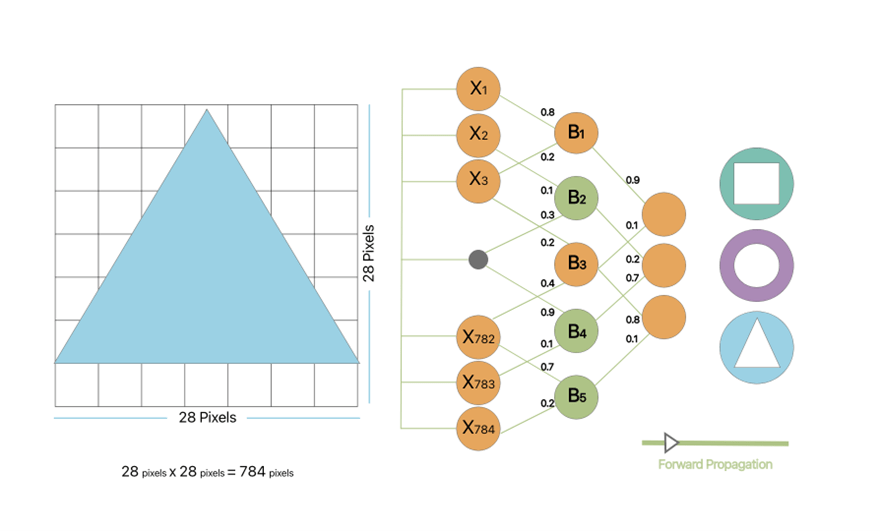

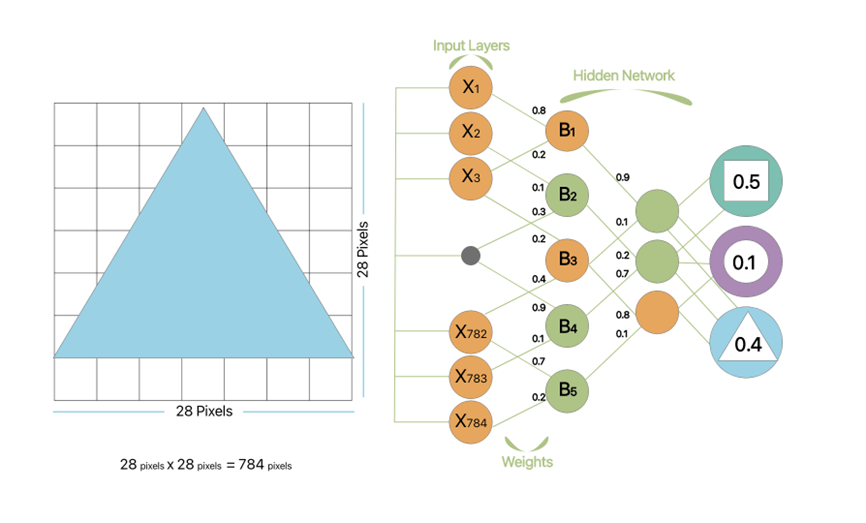

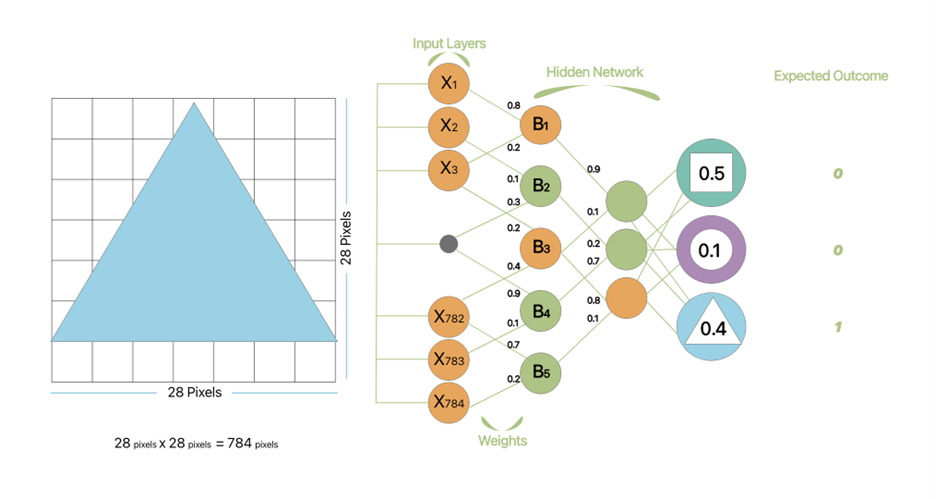

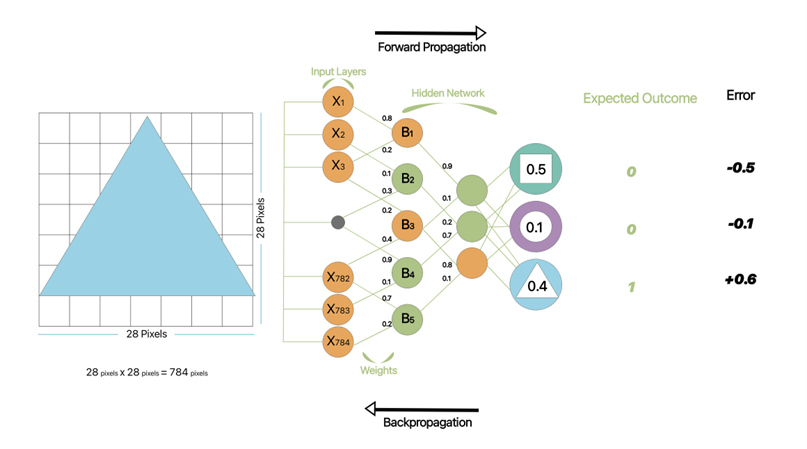

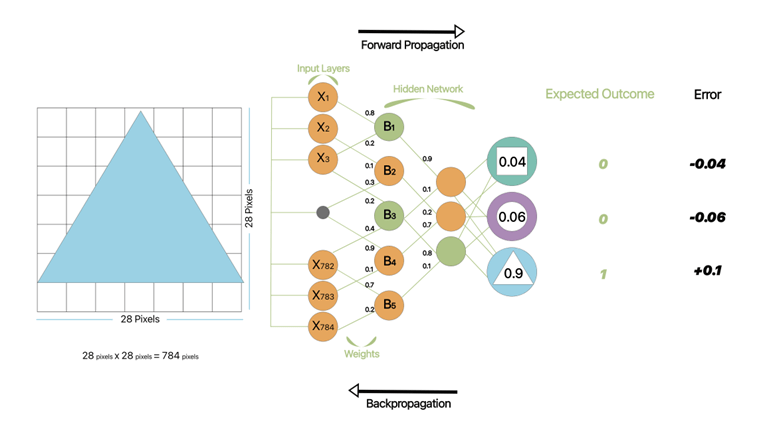

Explaining deep learning is definitely not an easy task, so let's break it down with an example: a machine trying to guess which shape it has been given.

Let's say we have three types of shapes: a triangle, a circle, and a rectangle.

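Each neuron combines its inputs by multiplying each one by a weight and adding up the results. For example, a neuron connected to inputs X1 and X3 with weights 0.8 and 0.2 computes:

$$X_1 \times 0.8 + X_3 \times 0.2$$

Each neuron also has its own numerical value, called the bias, which is added to this weighted sum:

$$(X_1 \times 0.8 + X_3 \times 0.2) + B_1$$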
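To make the arithmetic concrete, here is a minimal Python sketch of a single neuron computing the weighted sum plus bias described above. The weights 0.8 and 0.2 come from the example; the input values for X1 and X3 and the bias B1 = 0.1 are made-up numbers for illustration. Real networks usually pass this result through an activation function, and the sigmoid used here is our own choice, not something the example specifies.

```python
import math

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias (the neuron's raw score)."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

def sigmoid(z):
    """A common activation function that squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical input values for X1 and X3; the weights are taken from the example.
x1, x3 = 1.0, 0.5
b1 = 0.1  # hypothetical bias B1

z = neuron_output([x1, x3], [0.8, 0.2], b1)  # 1.0*0.8 + 0.5*0.2 + 0.1
print(round(z, 2))           # 1.0
print(round(sigmoid(z), 3))  # 0.731
```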

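During training, the network compares its guess with the correct answer and adjusts its weights and biases to reduce the error. Over time, as more shapes are fed into the network, it becomes better and better at recognizing and classifying them. It can even correctly classify shapes it has never seen before, such as a triangle drawn at a different size or angle, by using the patterns and features it has learned from previous examples.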
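The training loop can be sketched in Python as a tiny single-layer classifier for the three shapes. Everything here is an assumption for illustration: the hand-made features (corner count divided by 4, whether the shape has right angles, whether it is curved), the learning rate and the number of passes. It uses softmax regression, the simplest "network", with one neuron per shape. A deep network stacks several such layers and uses backpropagation to pass the error back through all of them, but the core idea of nudging weights and biases to shrink the error is the same.

```python
import math
import random

SHAPES = ["triangle", "circle", "rectangle"]

# Hypothetical hand-made features for each shape:
# [number of corners / 4, has right angles (0 or 1), is curved (0 or 1)]
DATA = [
    ([0.75, 0.0, 0.0], 0),  # triangle
    ([0.0, 0.0, 1.0], 1),   # circle
    ([1.0, 1.0, 0.0], 2),   # rectangle
]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_probs(weights, biases, x):
    """One neuron per shape: weighted sum of the inputs plus a bias, then softmax."""
    scores = [sum(w * xi for w, xi in zip(weights[k], x)) + biases[k]
              for k in range(len(biases))]
    return softmax(scores)

def train(data, epochs=500, lr=0.5, seed=0):
    """Repeatedly show the examples and nudge weights and biases to reduce the error."""
    rng = random.Random(seed)
    n_in, n_out = len(data[0][0]), len(SHAPES)
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    for _ in range(epochs):
        for x, label in data:
            probs = predict_probs(weights, biases, x)
            for k in range(n_out):
                # Error for shape k: predicted probability minus the target (1 or 0).
                # This is the gradient of the cross-entropy loss w.r.t. shape k's score.
                error = probs[k] - (1.0 if k == label else 0.0)
                for i in range(n_in):
                    weights[k][i] -= lr * error * x[i]
                biases[k] -= lr * error
    return weights, biases

def classify(weights, biases, x):
    """Pick the shape whose neuron gives the highest probability."""
    probs = predict_probs(weights, biases, x)
    return SHAPES[probs.index(max(probs))]

weights, biases = train(DATA)
for features, label in DATA:
    # After training, each shape should be classified as itself.
    print(SHAPES[label], "->", classify(weights, biases, features))
```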

Applications of deep learning

Deep learning isn't just for recognizing shapes, though; it has the potential to do some really remarkable things. For example, imagine if you could teach a computer to understand and speak your language, just like your friend does. That's what deep learning aims to do in natural language processing (NLP). By analysing patterns and structures in language, deep learning algorithms can translate, summarise, or even write new text that reads as if a human wrote it.

Deep learning is also great at recognizing and transcribing speech. Just as you can understand what someone is saying even if they have an accent or are speaking softly, deep learning models can learn to transcribe spoken words with high accuracy, even in noisy or challenging environments.

| Go, an ancient Chinese board game, is famously difficult for computers to play because there are so many possible moves. But in 2017, a deep learning algorithm called AlphaZero changed the game. Starting with nothing but the rules, it taught itself by playing against itself, learning from its own mistakes and successes which moves are good and which ones are bad. And because it uses deep learning techniques, it can recognize patterns and strategies that surprised even expert human players. Over time, AlphaZero became so strong at Go that it outplayed earlier versions of AlphaGo, the program that had already beaten some of the world's best human players. It's an incredible demonstration of the power of deep learning, and it shows that there is still so much we can learn from these algorithms. |

Training a deep learning model to understand language or recognize speech can be a very time-consuming and expensive process, requiring huge amounts of data and computing resources. That's where transfer learning and pre-trained models come in.

Transfer learning is like taking the knowledge that you've learned in one area and applying it to another area. With deep learning, transfer learning involves using a pre-trained model - a model that's already been trained on a massive amount of data - and adapting it to a new task.

For example, let's say you want to train a deep learning model to understand the language of a specific industry, like medicine. Instead of starting from scratch, you could use a pre-trained model that already understands the basics of language, and then fine-tune it on medical terminology and context. This approach can save a lot of time and resources, while still achieving great results.
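Here is a minimal Python sketch of one common form of transfer learning, sometimes called feature extraction: a "pre-trained" layer is kept frozen and only a small new output layer (the "head") is trained on the new task. The pre-trained weights, the inputs and the labels are all made-up numbers. In the medical example above, the frozen part would be a large language model trained on general text, and in practice fine-tuning often updates some of the pre-trained weights too.

```python
import math

# Hypothetical "pre-trained" layer. In practice these weights would come from a
# model already trained on a large, general dataset; here they are fixed numbers.
PRETRAINED_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]
PRETRAINED_BIASES = [0.0, -0.25]

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def frozen_features(x):
    """Forward pass through the pre-trained layer. Its weights are never updated."""
    return [relu(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(PRETRAINED_WEIGHTS, PRETRAINED_BIASES)]

def predict(head_w, head_b, x):
    """Probability from the new head, computed on top of the frozen features."""
    f = frozen_features(x)
    return sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)

def fine_tune_head(data, epochs=1000, lr=0.5):
    """Train only the new output neuron on the small dataset for the new task."""
    head_w, head_b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = frozen_features(x)
            error = predict(head_w, head_b, x) - y  # gradient of log loss w.r.t. the score
            head_w = [w - lr * error * fi for w, fi in zip(head_w, f)]
            head_b -= lr * error
    return head_w, head_b

# Tiny hypothetical dataset for the new task: label 1 only for input [1, 0].
NEW_TASK = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([1.0, 1.0], 0), ([0.0, 0.0], 0)]
head_w, head_b = fine_tune_head(NEW_TASK)
for x, y in NEW_TASK:
    print(x, "label:", y, "prediction:", round(predict(head_w, head_b, x), 2))
```

Because only two head weights and one bias are trained, very little data and computation are needed; the frozen layer does the heavy lifting, which is the time and resource saving the paragraph describes.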

Pre-trained models are also used in other areas, like computer vision. There are pre-trained models that can recognize objects in images, identify faces, or even detect emotions. By using these pre-trained models as a starting point, researchers and developers can build more advanced applications that can solve real-world problems.

There are many types of deep learning models, each suited to different purposes.

| Type | Real-world applications |
|---|---|
| Recurrent Neural Networks (RNNs) are used for processing sequential data, like text or speech. They have loops that allow information to persist from one step to the next, making them good at understanding context and making predictions based on it. For example, RNNs are used in natural language processing to predict the next word in a sentence based on the previous words. | Financial institutions use RNNs to analyse time-series data such as stock prices and to forecast market trends. RNNs can also be used for fraud detection by identifying patterns in transaction data and flagging suspicious activity. |
| Convolutional Neural Networks (CNNs) are great at processing images and video. They use filters that detect patterns and features in images, which makes them well suited to tasks like image classification, object detection, and image segmentation. | CNNs are commonly used in computer vision, for example in self-driving cars, where they help identify objects on the road, read street signs, and recognize other cars. They are also used in medical image analysis, such as detecting tumours or identifying abnormalities in X-rays. |
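The two mechanisms in the table can each be sketched in a few lines of plain Python. The first function slides a small filter over a tiny image, which is the core operation of a CNN layer; the second shows an RNN's "loop", where a hidden state carries information from earlier items in a sequence to later ones. The image, the filter and the weights are made-up values, and real libraries add many details (multiple channels, learned filters, vectors instead of single numbers) that are left out here.

```python
import math

def conv2d(image, kernel):
    """Slide `kernel` over `image` (only where it fits fully) and take a weighted
    sum at each position. Like most deep learning libraries, this skips the
    kernel flip of a textbook convolution (strictly, it is cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def rnn(inputs, w_x, w_h, b):
    """Single-unit RNN: each new hidden state depends on the current input AND
    the previous hidden state, so earlier inputs keep influencing later steps."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# A 4x4 image that is dark on the left and bright on the right.
image = [[0, 0, 1, 1] for _ in range(4)]
# Hypothetical filter that responds to a dark-to-bright vertical edge.
edge_filter = [[-1, 1],
               [-1, 1]]
print(conv2d(image, edge_filter))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]

# A single 1 followed by zeros: because w_h > 0, the hidden state stays
# non-zero after the input goes quiet (the "memory" of the loop).
print([round(h, 3) for h in rnn([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)])
```

The filter's output peaks exactly where the edge is, which is how a CNN "detects" a feature; stacking many learned filters lets it detect increasingly complex patterns.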

Generative deep learning models, such as Generative Adversarial Networks (GANs) and Generative Pre-trained Transformers (GPT), are currently on the rise and are used to create new content.

| Type | Real-world applications |
|---|---|
| Generative Adversarial Networks (GANs) can create new images, videos, or even music by generating new data that resembles their training data. | Clothing companies can use GANs to generate new clothing designs. GANs can also be used to create realistic images for advertising or movies. |
| Generative Pre-trained Transformers (GPT) are great at generating new text that reads as if a human wrote it. These models are used in creative writing and content creation. | GPT models power chatbots such as ChatGPT, which can answer customer questions and provide assistance. They can also be used for language translation, summarising documents, and drafting articles. |
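For readers curious where the "adversarial" in GAN comes from: in the original formulation by Goodfellow et al. (2014), a generator $G$ turns random noise $z$ into candidate samples, while a discriminator $D$ outputs the probability that a sample came from the real training data rather than from $G$. The two are trained against each other on a single objective, which $D$ tries to maximise and $G$ tries to minimise:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

Intuitively, as $D$ gets better at spotting fakes, $G$ is pushed to produce data that looks more and more like the real training data.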

Looking into the future, there are several exciting developments in deep learning that could have significant impacts on various industries. One of the most promising is reinforcement learning (explained simply in "ML: What is it really"), which involves training an agent to interact with an environment and learn through trial and error. Reinforcement learning has already achieved impressive results in fields like robotics, gaming, and self-driving cars, and we can expect to see even more advances in these areas in the coming years.
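Trial-and-error learning can be illustrated with the simplest reinforcement learning setting, the multi-armed bandit, where an agent repeatedly picks one of several actions and learns from the rewards it receives. This Python sketch uses the classic epsilon-greedy strategy: mostly pick the action that currently looks best, but occasionally try a random one. The success rates of the actions are hypothetical, and real applications such as robotics or games involve far richer environments, usually with a deep network in place of the simple list of estimates used here.

```python
import random

def epsilon_greedy_bandit(true_win_rates, steps=5000, epsilon=0.1, seed=0):
    """Learn by trial and error which action pays off most often.

    `true_win_rates` are hidden from the agent; it only sees the rewards."""
    rng = random.Random(seed)
    n = len(true_win_rates)
    estimates = [0.0] * n  # the agent's current guess of each action's value
    counts = [0] * n       # how many times each action has been tried
    for _ in range(steps):
        # Explore occasionally, otherwise exploit the best-looking action.
        if rng.random() < epsilon:
            action = rng.randrange(n)
        else:
            action = max(range(n), key=lambda a: estimates[a])
        # The environment responds with a reward of 1 (success) or 0 (failure).
        reward = 1.0 if rng.random() < true_win_rates[action] else 0.0
        counts[action] += 1
        # Incremental average: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print("estimated win rates:", [round(e, 2) for e in estimates])
print("times each action was tried:", counts)
```

The small amount of random exploration is what lets the agent discover the best action at all; without it, the agent could keep repeating whichever action happened to succeed first.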

One of the biggest challenges deep learning faces is the massive amount of data required to train models effectively. As data keeps growing exponentially, current computing power may not be enough to keep up with the increasing demands of deep learning. Quantum computing, which uses quantum-mechanical effects to solve certain kinds of problems far faster than classical computers can, might one day speed up the training of deep learning models and enable more complex computations. The field is still in its early stages, but researchers are already exploring its potential applications in deep learning and machine learning.

Deep learning has come a long way since its inception. It has already revolutionised various industries and will continue to do so in the future. With continued progress in research, we can expect increasingly powerful and efficient models that might help forecast natural disasters like earthquakes, optimise stock market investments, or even provide personalised medical treatments. From improving our daily lives to tackling global challenges, the possibilities for deep learning are vast.

The future of deep learning is truly exciting, and we can't wait to see where it takes us.